Virtualizing the CO Pod to Remain Relevant

Rapid advances in data center and cloud-based technologies have put the entire operator ecosystem in motion. Operators and their shareholders want to use the automation advantages of deploying and managing infrastructure and services with modern cloud tooling, but have a large, embedded base of legacy OSSs and BSSs to deal with. Not all operators will change, or can adapt, at the same rate as this technological and cultural disruption, so industry norms and standards bodies are struggling to provide timely and usable solutions to the hard problems facing the industry.

Arthur D. Little, AT&T, Deutsche Telekom, and Telefónica released a major study, Who Dares Wins!, outlining the vital importance of the telecoms industry moving to a more virtualized, converged, and cloud-based architecture for its access networks. Their pioneering approach relies on disaggregating and virtualizing the access network to considerably expand the role of the central office (CO) to include fixed and mobile aggregation and edge cloud services. Their recommendations will enable ICT providers to meet 3 current challenges: demand growing faster than revenues, technology convergence, and the increasing value delivered by third parties within their ecosystems.

What Is the POD?

The Central Office pod, or CO pod, is a new design that takes a different approach to operator transformation. It avoids boiling the ocean with long-lead-time, multi-domain-operator transformation programs. Instead, it focuses on reimagining the operator production platform as a cloud-services platform, starting with the access network.

Through clever rearchitecting of industry-standard designs and using CUPS principles, proprietary access equipment is disaggregated and morphed into a small-scale infrastructure cloud platform with specialized access peripherals, which provide an access-as-a-service software platform. However, this design is critically different from its proprietary equivalent in 3 ways:

First, the CO pod can run access as well as other application workloads on the same hardware and software stack. This requires a re-think of networking as something that can be dealt with in an IT way.

Second, it uses the same open-source tools, cloud networking, data center technologies, and service mind-set that the cloud behemoths use.

Third, it uses the same DevOps techniques to automate workload and infrastructure management.

The combined effect of these architectural changes is the creation of altogether new possibilities for operator production platform transformation. Access networks and associated IT systems represent a large proportion of industry capital spending, so even small improvements in competitiveness from one operator to the next can boost operator value generation capability and ROI significantly. The CO pod also de-risks potential cloud services and network edge services, because it does not isolate capital investment to only one purpose.

The constant need to invest in network upgrades and expansions, together with regular technology advances that increase network data rates, makes it a safe business plan to deploy cloud infrastructure solely to support network growth.

Additional services on that same cloud are at little or no risk of stranding capital and can be opportunistically explored. In our collective view, because the CO pod provides a safe place that lowers the costs and risks of experimentation and learning cloud concepts in the operator production environment, it is uniquely able to drive service innovation — and therefore, it is a winning design.

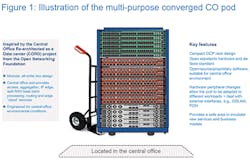

The physical basis of the new design is a modular, multipurpose infrastructure pod engineered for the CO environment. (See Figure 1.) The CO pod is not based on one-off hardware designs from telecoms industry vendors or OEMs; rather, it is based on general-purpose OCP hardware specifications, which are supplied by many vendors and used across many industries — with some diligence to ensure they can work in CO environmental conditions.

It consists of a composable rack of compute, storage, and a high-speed, programmable switching fabric, as well as special-purpose devices that enable FTTx access, called disaggregated OLTs. All of these are supplied by cloud industry vendors or ODMs.

As with many cloud systems, there is no need for a complete consensus on several important elements, such as deployment topology and the appropriate software environment. There is room for system differentiation, even though the systems are constructed from similar or even identical components. The ecosystem enjoys multiple options and approaches, which enables higher levels of technology control. These include both open-source/spec and proprietary components, as well as commercial support options, with varying degrees of system integration provided in-house or by independent suppliers.

Workload Convergence

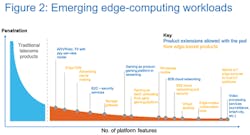

An appropriately equipped CO pod can support multiple workloads. The CO pod can focus on, and be economically justified by, fixed-network access in FTTx environments, and can also serve as an edge production platform for smart home or office and perimeter security services. The CO pod can support stand-alone edge services, future 5G services, and edge cloud applications. Bringing together mobile and fixed workloads in a common pod allows truly converged services, enabling transparent, access-agnostic traffic aggregation and management.

The CO pod can also be used for much more than simply hosting operator edge functions, including cloud value pools. With the right security and isolation between internal and third-party workloads, spare capacity can be made available to third-party developers. Leveraging the locational advantage of the pod to provide low-latency infrastructure services, developers can deploy latency-sensitive workloads such as augmented reality and localized, data-intensive workload processing as a precursor to ultra-reliable low-latency services in 5G.
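To make the idea of offering spare capacity to third parties more concrete, the following is a minimal sketch of an admission rule, not something taken from the report. It assumes a pod is described only by a CPU core count and that the operator reserves a fixed share for its own workloads. The class names, the `reserved_for_operator` parameter, and the workload names are all hypothetical and chosen for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Workload:
    name: str
    cpu_cores: int
    third_party: bool = False  # hypothetical flag: True for external developers


@dataclass
class Pod:
    total_cores: int
    reserved_for_operator: int  # assumption: cores third parties may never consume
    placed: list = field(default_factory=list)

    def used(self, third_party: bool) -> int:
        """Cores currently consumed by operator or third-party workloads."""
        return sum(w.cpu_cores for w in self.placed if w.third_party == third_party)

    def admit(self, w: Workload) -> bool:
        """Admit a workload if it fits; third parties only receive spare capacity."""
        free = self.total_cores - self.used(True) - self.used(False)
        if w.cpu_cores > free:
            return False
        if w.third_party:
            # Total third-party load must stay outside the operator reservation.
            spare = self.total_cores - self.reserved_for_operator
            if self.used(True) + w.cpu_cores > spare:
                return False
        self.placed.append(w)
        return True


pod = Pod(total_cores=64, reserved_for_operator=48)
results = [
    pod.admit(Workload("access-olt-control", 32)),         # operator access workload
    pod.admit(Workload("ar-rendering", 12, True)),          # fits in the 16 spare cores
    pod.admit(Workload("video-analytics", 8, True)),        # would exceed the spare share
    pod.admit(Workload("mobile-user-plane", 20)),           # operator may use the rest
]
print(results)
```

A real pod would rely on its orchestration platform for isolation and scheduling; the sketch only shows the ordering of priorities, with access and other operator workloads protected first and third-party workloads limited to what is left over.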

Edge Computing Services

Redesigning access networks also provides the tools for operators to innovate services and user experiences, emulating cloud players. Just like a public-cloud DC, the pod is a delimited infrastructure resource, which means it can be managed, provisioned, orchestrated, and patched in isolation from the rest of the production platform. As a result, it provides a safe place to experiment with new product ideas and software prior to widespread deployment.

New services might use the CO pod’s locational advantage as a feature to meet the needs of latency-sensitive, massive-edge data or cyber-type workloads. (See Figure 2.)

Alternatively, the ability to locally host services can be used to delocalize centralized service delivery platforms, as well as customer and order management systems. This can enable a new type of quasi-autonomous production model that contains locally hosted application services; subscribers consume most of their services from CO infrastructure.

This new approach to production can provide a transient or permanent solution to the complexities of dealing with dozens of legacy services and platforms. In addition, under the right conditions, local hosting and other local micro-services can be extended to third parties, based on an open-edge infrastructure services model akin to the public cloud, to cement partnerships and capture additional revenues.

Adopting the CO pod allows operators to not only address the forces of change, but also recast themselves as infrastructure-based service companies built for service differentiation, or ultra-lean, low-cost connectivity providers to edge-based applications. Specifically:

• Aligning operator technical platforms with hyper-scale architectures allows operators to better address data and network growth. It also ameliorates changes in technology, suppliers, and generations of equipment, and reduces the time to market for these types of changes. The CO pod comes along with disaggregation of vertical equipment architectures and remapping of the resultant capabilities to appropriate cloud-native micro-services, merchant silicon, and cloud infrastructure, so the system can be largely supported using typical data center equipment. This helps to bring much-needed competition into the industry supply base.

• Moreover, softwarization of access networks enables the use of cloud-hardened, open-source software to facilitate continuous innovation, closed-loop automation and services mashups. The outcome is a dramatically wider universe of hardware, as well as commercial and open-source software solutions that bring operators closer to the economies of scale enjoyed by web-scale providers.

• Adopting cloud technologies widens the addressable labor pool from which operators can source talent. The cloud paradigm is developing a vast new pool of talent from cloud and security architects, big data engineers, and agile and DevOps specialists. Their tools of choice and skills are vastly different from technical skills previously found at operators. The wider virtualization movement, which caught the industry unprepared as well as under-skilled, provides important lessons. Rather than taking an insular view to talent, the new design recognizes that the industry must pivot towards the same platforms and tooling that are common in the cloud; this will enable the industry to draw on this wider labor pool, which will bring with it vital skills and ways of working to drive individual as well as industry competitiveness.

• Bringing cloud technologies and practices inside the telecoms operator allows the industry to align itself with the cloud paradigm. It is not yet apparent whether operators should replicate existing cloud models for innovation and monetization or find other ways. Whatever the direction, the architecture enables operators to fast-track transformation as well as have a go at innovation, creating meaningful differentiation among operators.

The Case for the CO Pod

The CO pod provides operators with a safe place to virtualize/re-engineer existing services, as well as prototype and test new service ideas, using cloud-hardened development and operations methodologies. The CO pod gives operators a safe place to start over; we see 3 options:

1. Converged Virtualized Access can encompass both fixed and mobile access, harmonizes the way all traffic is treated at the edge, and provides higher throughput at lower total cost of ownership.

2. Autonomous Operator opens a range of options to deploy complementary, highly-automated edge services using the CO pod.

3. Open Operator Platform enables qualified third parties to exploit the edge using pay-per-use models in a model akin to public cloud.

No 2 operators have the same priorities or starting point, so a thorough economic analysis must consider the specific competition, technology roadmap, and debt for each case individually. Nonetheless, to illustrate the logic and benefits of onboarding the CO pod, Figure 3 shows 3 pathways for product and production platform development.

That said, the strategic benefits of the CO pod architecture require a more holistic view of the changes enabled by the new design, such as agility and efficiency in developing new offerings, channels, operations, and supply chain value. These changes provide the foundations to drive step-change improvements far beyond procurement, including end-to-end production platform efficiency and innovating the operator business model. Nonetheless, direct economic comparisons remain valuable because these strategic benefits are subjective.

Conclusion

While this excerpt introduces some key concepts related to the future of CO pod virtualization, the full report dives into critical details providers must analyze if and when they decide to adopt this type of transformation. (Visit https://www.adlittle.com/en/who-dares-wins.)

The report stresses that the timing is right to move to this new model, given greater focus on convergence and the impending deployment of FTTx and 5G. Moreover, the architecture provides a safe place for each operator to innovate and explore new services, and, going at their own pace, to transform skills, operations, and business processes, to benefit from improved agility.

Finally, the report recognizes that there is still more work to be done. Telecom ecosystems are not yet on a par with IT and web-scale data centers, so the industry must work together as a community to move into the future.

This article is an excerpt from the Arthur D. Little report Who Dares Wins!, published September 2019, found at https://www.adlittle.com/en/who-dares-wins. For more information, please email [email protected] or visit https://www.adlittle.com/.

Additional Authors:

Jesús Portal is a Partner at Arthur D. Little, based in Madrid, and is responsible for the Telecommunications, Information Technology, Media and Electronics (TIME) practice in Spain.

About the Author