On the Edge of a Better Normal

5G and Edge Computing

With the rapid transition to remote work, distance learning, and telehealth, ubiquitous high-speed connectivity is now woven into the fabric of our everyday lives and has become essential to the way we work, live, and play. The pandemic has only accelerated demand for higher bandwidth from businesses and consumers alike, who need faster, higher-capacity networks with ultra-reliable, ultra-low-latency connectivity. Fast broadband is no longer a nice-to-have, and 5G solutions that can meet this demand are now becoming available.

5G technology’s low latency and high speed also make it ideal for many IoT applications. 5G promises very high data rates between users, devices, and the network. However, the additional bandwidth that 5G unleashes puts more pressure on the infrastructure that gathers and processes all of this data to produce useful information or actions. The Multi-access Edge Computing (MEC) initiative anticipated this, recognizing that compute capacity placed as close as possible to end users and their devices is required to deliver the low-latency, high-capacity processing that a new class of 5G-enabled applications demands.

MEC initiatives focus on building out compute capacity in edge networks. With edge computing, cloud computing becomes more distributed, but local processing at the edge is not a replacement for the hyper-scale, data-center-based cloud; it complements it.


When dealing with the massive amounts of data produced by sensors, analyzing and filtering that data locally before sending it deeper into the cloud yields sizable savings in network bandwidth and computing resources. It also makes connected applications more responsive, opening the door to new applications that were previously too slow for broad adoption.
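To make local filtering concrete, here is a minimal Python sketch of one common edge technique, a deadband (report-by-exception) filter, chosen here purely for illustration: a reading is forwarded upstream only when it moves more than a set threshold away from the last value sent. The function name `deadband_filter`, the threshold, and the sample readings are hypothetical and not part of any particular SEN or MEC product.

```python
from typing import Iterable, Iterator, Optional, Tuple

# A reading is (timestamp in seconds, measured value); both are illustrative.
Reading = Tuple[float, float]


def deadband_filter(readings: Iterable[Reading], threshold: float) -> Iterator[Reading]:
    """Yield only readings that differ from the last forwarded value by more
    than `threshold`. The first reading is always forwarded as a baseline."""
    last_sent: Optional[float] = None
    for ts, value in readings:
        if last_sent is None or abs(value - last_sent) > threshold:
            last_sent = value
            yield ts, value


if __name__ == "__main__":
    # Hypothetical temperature samples from one sensor, one per second.
    samples = [(0, 21.0), (1, 21.1), (2, 21.05), (3, 22.4), (4, 22.5), (5, 19.8)]
    forwarded = list(deadband_filter(samples, threshold=0.5))
    print(f"{len(forwarded)} of {len(samples)} readings sent upstream: {forwarded}")
```

With a 0.5-degree threshold, only three of the six samples leave the node; the cloud still sees every significant change, but steady-state noise never consumes uplink bandwidth.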

3 Challenges

Here are three significant challenges that edge computing addresses.

Challenge #1. STORAGE

Storage is relatively cheap, but if the price of storage falls by 50% while we create 100% more data, total storage spending stays exactly the same, and we still have a problem. Data is only as useful as the knowledge we can extract from it.

Rather than shipping all of that data to a remote storage location for future processing, the ability to process the data as close as possible to the source in real time, compressing it into meaningful knowledge, results in far greater storage efficiency.
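The arithmetic behind the storage challenge, and the effect of compressing raw data into knowledge at the source, can be sketched in a few lines of Python. The prices, volumes, one-minute window, and the `storage_cost` and `summarize` helpers are illustrative assumptions; min/max/mean summaries stand in for whatever analytics a real edge application would run.

```python
from statistics import mean
from typing import Dict, List


def storage_cost(price_per_tb: float, volume_tb: float) -> float:
    """Total storage spend is simply unit price times volume."""
    return price_per_tb * volume_tb


def summarize(samples: List[float], window: int) -> List[Dict[str, float]]:
    """Reduce each consecutive window of raw samples to a min/max/mean summary."""
    chunks = (samples[i:i + window] for i in range(0, len(samples), window))
    return [{"min": min(c), "max": max(c), "mean": mean(c)} for c in chunks]


if __name__ == "__main__":
    # Hypothetical figures: price per TB halves while data volume doubles.
    print(storage_cost(20.0, 10.0), storage_cost(10.0, 20.0))  # 200.0 200.0

    # One hour of synthetic one-per-second readings, summarized per minute.
    hour = [20.0 + (i % 7) * 0.1 for i in range(3600)]
    summaries = summarize(hour, window=60)
    print(len(hour), "raw values ->", 3 * len(summaries), "stored values")
```

Halving the unit price while doubling the volume leaves the bill unchanged, whereas reducing each minute of one-per-second samples to three summary values cuts what must be stored by a factor of 20.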

Challenge #2. BANDWIDTH

Moving all of the collected data from every device back to a central cloud has a cost. By processing the data as close as possible to its sources and passing only relevant events to the data center, operators can eliminate much of that traffic and its cost.

These savings include not only the cost of the bandwidth itself but also the need to build out infrastructure capacity continually to handle it.
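A back-of-the-envelope estimate shows how quickly raw device traffic adds up and how much event-only forwarding saves. The fleet size, message rate, payload size, and the 1% forwarding fraction below are hypothetical numbers chosen for illustration, and `daily_upload_gb` is a made-up helper.

```python
SECONDS_PER_DAY = 86_400


def daily_upload_gb(devices: int, msgs_per_sec: float, bytes_per_msg: int,
                    forward_fraction: float = 1.0) -> float:
    """Estimate daily upstream traffic in gigabytes (1 GB = 10**9 bytes).

    `forward_fraction` is the share of messages that still leave the edge
    after local filtering (1.0 means everything goes to the central cloud).
    """
    bytes_per_day = devices * msgs_per_sec * bytes_per_msg * SECONDS_PER_DAY
    return bytes_per_day * forward_fraction / 1e9


if __name__ == "__main__":
    raw = daily_upload_gb(devices=1_000, msgs_per_sec=10, bytes_per_msg=200)
    events = daily_upload_gb(1_000, 10, 200, forward_fraction=0.01)
    print(f"raw: {raw:.1f} GB/day, events only: {events:.2f} GB/day")
    # raw: 172.8 GB/day, events only: 1.73 GB/day
```

Under these assumptions, 1,000 devices each sending ten 200-byte messages per second generate about 172.8 GB of upstream traffic per day; forwarding only 1% of messages as relevant events reduces that to under 2 GB.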

Challenge #3. RESPONSIVENESS

Some decisions must be made in real time, with data inputs processed within milliseconds. Manufacturing control systems, robotics, autonomous vehicles, access controls, live human interaction, AI applications, and augmented reality/virtual reality systems all require localized processing to deliver the necessary level of responsiveness. Centralized clouds, which may be hundreds or thousands of miles from the data source, add unavoidable propagation delay and can be plagued by network congestion or by server capacity and scaling issues.
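Distance alone sets a floor on responsiveness. The sketch below estimates the minimum round-trip propagation delay over fiber using the common rule of thumb that light in fiber travels at roughly 200,000 km/s (about two-thirds of its speed in a vacuum). The distances and the `fiber_rtt_ms` helper are hypothetical, and the model ignores routing detours, queuing, and processing, all of which only add delay.

```python
# Rule of thumb (assumption): light in optical fiber covers roughly 200,000 km/s,
# about two-thirds of its speed in a vacuum, i.e. ~5 microseconds per km.
FIBER_KM_PER_S = 200_000
KM_PER_MILE = 1.609344


def fiber_rtt_ms(distance_km: float) -> float:
    """Round-trip propagation delay over fiber, in milliseconds.

    Ignores routing detours, queuing, serialization, and server processing,
    so real round-trip times are always higher.
    """
    return 2 * distance_km / FIBER_KM_PER_S * 1_000


if __name__ == "__main__":
    for label, km in [("edge node, 10 km away", 10),
                      ("regional cloud, 500 mi away", 500 * KM_PER_MILE),
                      ("distant cloud, 2,000 mi away", 2_000 * KM_PER_MILE)]:
        print(f"{label}: {fiber_rtt_ms(km):.2f} ms minimum round trip")
```

A node 10 km away adds only about 0.1 ms of round-trip propagation delay, while a data center 2,000 miles away adds roughly 32 ms before any congestion or server queuing is counted.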

Once edge processing is in place, new applications previously considered unrealistic will emerge. However, this happens only if the industry considers edge-processing capacity as an addition to core capacity — not a replacement. That means software architects have to orchestrate the use of the combined processing capacity of the core and the edge as a whole, and partition their systems to dynamically take advantage of the unique and complementary benefits of each.
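One way software architects might partition work across core and edge is a simple placement rule keyed to each workload’s latency budget. In this hedged Python sketch (the `Workload` type, the `place` function, and all latency figures are hypothetical), a workload stays in the core cloud whenever the core can meet its budget, reflecting the core’s scale economies, and moves to the edge only when it must.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Workload:
    name: str
    latency_budget_ms: float  # hypothetical: max tolerable round-trip latency


def place(workloads: List[Workload], edge_rtt_ms: float, core_rtt_ms: float) -> Dict[str, str]:
    """Prefer the core cloud when it meets a workload's latency budget;
    fall back to the edge; flag workloads that neither tier can serve."""
    placement: Dict[str, str] = {}
    for w in workloads:
        if core_rtt_ms <= w.latency_budget_ms:
            placement[w.name] = "core"
        elif edge_rtt_ms <= w.latency_budget_ms:
            placement[w.name] = "edge"
        else:
            placement[w.name] = "unplaceable"
    return placement


if __name__ == "__main__":
    jobs = [Workload("robot-control", 5), Workload("ar-overlay", 20),
            Workload("nightly-report", 60_000)]
    print(place(jobs, edge_rtt_ms=2, core_rtt_ms=40))
    # {'robot-control': 'edge', 'ar-overlay': 'edge', 'nightly-report': 'core'}
```

A production orchestrator would also weigh bandwidth, data locality, and available edge capacity; this sketch captures only the latency dimension.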

But creating more compute capacity at the edge does not just mean building lots of smaller data centers. The scale economies that drive the central cloud’s hyper-scale data centers, with racks and racks of servers housed in a single massive, secure facility with centralized power and cooling, do not exist at the edge of the network where devices connect. Because the edge cloud requires broad physical distribution, integration and robust interconnectivity are necessary so that operators and their support teams are not overwhelmed by the large number of different elements and physically distributed failure points that this distribution creates. Separate routers, IoT gateways, firewalls, and processing servers from multiple vendors (along with the infrastructure and human support they require) are not the answer. Standards such as those developed by the ETSI MEC groups can help reduce the complexity of combining multiple physical devices into a functional edge cloud, but only to a degree.

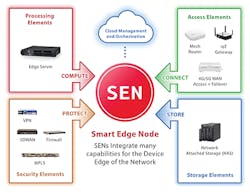

Figure 1. What is a Smart Edge Node (SEN)? The SEN integrates IoT Access Point/Gateway, Edge Server, and security functions into a single device designed for device-edge deployment.

Since processing needs to be as close as possible to where data is created and consumed (where the devices connect), and we need to keep things manageable, it makes sense to integrate processing resources into cellular access points, Wi-Fi routers, and IoT access points/gateways. This new, integrated connectivity-plus-computation element, which addresses both edge cloud creation and MEC architectures, is the Smart Edge Node (SEN). (See Figure 1.)

SENs must include not only end-device access/LAN connectivity but also WAN connectivity and processing resources. And because 5G’s higher radio frequencies and shorter reach demand more physical access points than 4G/LTE (more devices in more locations), these new SENs must be easy to deploy and manage, so that we can not only upgrade existing sites but also add new ones without the time and effort today’s solutions require.
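A simple geometric model shows why shorter reach multiplies the number of access points: the area a cell covers grows with the square of its radius, so halving the radius roughly quadruples the number of sites. The sketch below assumes each site’s circular footprint is fully used with no overlap or gaps, which is impossible in practice and therefore gives a lower bound; the area, radii, and the `sites_needed` helper are hypothetical.

```python
import math


def sites_needed(area_km2: float, cell_radius_km: float) -> int:
    """Lower bound on access-point sites for an area, assuming each site's
    circular footprint is fully used with no overlap or gaps (real layouts
    need overlap, hence more sites)."""
    return math.ceil(area_km2 / (math.pi * cell_radius_km ** 2))


if __name__ == "__main__":
    for radius in (1.0, 0.5, 0.25):  # hypothetical cell radii in km
        print(f"radius {radius} km -> at least {sites_needed(10.0, radius)} sites for 10 km^2")
```

For a 10 km² area, 1 km cells need at least 4 sites, 500 m cells at least 13, and 250 m cells at least 51.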

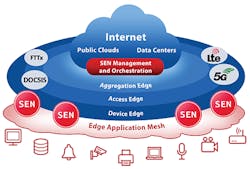

Figure 2. Where is a SEN 5G?

SENs must also have the ability to be part of an application mesh with other SENs, so that the processing capacity of all of the SENs in a given local edge network can be treated as an aggregated whole, with cloud-based orchestration managing the resource pool. If this application mesh can be created wirelessly and cellular WANs are used, the SENs need only have a physical connection to power. All other connectivity may be achieved wirelessly, making SEN deployment incredibly simple. Adding edge cloud processing capacity is as simple as powering up another SEN and adding it to the mesh. (See Figure 2.)
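The pooled-capacity idea can be sketched as a small data model: each SEN contributes its cores to a shared pool, joining the mesh is a single registration step, and a scheduler places workloads on whichever node has the most free capacity. `SmartEdgeNode`, `EdgeMesh`, the core counts, and the greedy most-free-cores policy are illustrative assumptions, not a description of any ETSI MEC interface or vendor orchestrator.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass
class SmartEdgeNode:
    node_id: str
    cpu_cores: int
    used_cores: int = 0

    @property
    def free_cores(self) -> int:
        return self.cpu_cores - self.used_cores


@dataclass
class EdgeMesh:
    nodes: Dict[str, SmartEdgeNode] = field(default_factory=dict)

    def join(self, node: SmartEdgeNode) -> None:
        """Adding capacity is just registering another powered-up node."""
        self.nodes[node.node_id] = node

    def total_free_cores(self) -> int:
        """The mesh's capacity is treated as one aggregated pool."""
        return sum(n.free_cores for n in self.nodes.values())

    def schedule(self, cores_needed: int) -> Optional[str]:
        """Greedy policy: place the workload on the node with the most free
        cores; return None if no single node can host it."""
        candidates = [n for n in self.nodes.values() if n.free_cores >= cores_needed]
        if not candidates:
            return None
        best = max(candidates, key=lambda n: n.free_cores)
        best.used_cores += cores_needed
        return best.node_id


if __name__ == "__main__":
    mesh = EdgeMesh()
    mesh.join(SmartEdgeNode("sen-1", cpu_cores=4))
    mesh.join(SmartEdgeNode("sen-2", cpu_cores=4))
    print(mesh.total_free_cores())                  # 8
    print([mesh.schedule(3) for _ in range(3)])     # ['sen-1', 'sen-2', None]
    mesh.join(SmartEdgeNode("sen-3", cpu_cores=4))  # power up one more node
    print(mesh.schedule(3))                         # sen-3
```

When the two four-core nodes fill up, the third three-core request fails until another SEN joins the mesh, mirroring the idea that adding edge capacity can be as simple as powering up another node.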

So, what does a SEN require to meet all of these needs? Rich connectivity, both wireless and wired, to start. For LAN connections, dynamic mesh Wi-Fi is important. It provides the reliable connectivity needed to create the edge cloud’s application mesh, as well as the Wi-Fi access for the end-user devices.

Given that IoT devices are everywhere, it also makes sense to include wireless IoT connection technologies such as Zigbee and Bluetooth/BLE for short-range, low-power links, and LoRa for long-distance connections and for locations such as large buildings where Wi-Fi struggles to penetrate walls and floors. Physical Ethernet LAN ports with Power over Ethernet (PoE) support for IoT devices such as IP cameras also make good sense. And of course, WAN connectivity, including both physical Ethernet ports and wireless cellular connectivity, is a must.

The SEN must also include rich, enterprise-class switching and routing capabilities. Beyond that, a SEN must offer easily accessible processing capacity. If applications that run in central data centers could also run on the edge cloud’s application processing mesh, that would dramatically improve the fluidity of application processing between core-cloud and edge-cloud resources, potentially allowing the two to merge logically into a tightly integrated processing system. Microservices in container-based architectures are an essential part of a successful edge computing strategy, offering the fluidity required at the network edge.

Beyond the connect and compute elements, the SEN must also have security built into its DNA, at the interface, processing, and overall system architecture levels — especially since the additional number of nodes being deployed offers a larger attack surface for bad actors. However, by placing security at the core of SEN capability, the increased number of edge elements shifts from being a potential liability to a position of strength against many kinds of cyberattacks.

Like this Article?

Subscribe to ISE magazine and start receiving your FREE monthly copy today!

When all of this capability can be delivered in a single appliance the size of an enterprise router or even smaller, the SEN emerges as a critical building block of the edge cloud envisioned by the MEC initiative.

Together, 5G and the need for more edge computing are driving yet another shift in the current "normal" computing paradigm, similar to what we saw when PCs replaced dumb terminals and computing became decentralized. 5G and edge computing will drive decentralization of cloud computing, giving businesses cost-effective options for new applications and services, and giving smart buildings and cities a more efficient way to become even smarter.